It was a paradise for academics working on artificial intelligence (AI) and facial recognition: the commercialization of the internet. Some ten to fifteen years ago, all kinds of photo services such as Flickr sprang up, where anyone could publish their own photos. Good for photographers, but also good for those researchers. In one fell swoop, they had access to an unimaginable number of photographs with which to train their facial recognition systems.

Alongside the increase in computing power, the availability of data has been the foundation for the take-off of AI. We now consider it normal that a surveillance camera or a self-driving car can tell a person from a garbage bag, but before such systems get to that point, they are fed vast numbers of labeled pictures: this is a person, this is a trash can, this is a street sign.

Around the turn of the century, researchers jumped for joy when a network picked the right one out of a collection of one hundred pictures. And ten years later, researchers were still “in Teletubbyland”, says Cees Snoek, professor of intelligent sensory information systems at the University of Amsterdam. Progress has been rapid ever since, thanks to an avalanche of training material. For example, a few years ago Google’s FaceNet was able to pick the right name from a collection of 5,000 images of celebrities in 99.6 percent of cases. It was even better than human experts.

Photo database

But there is a problem with all those fine image databases that universities and businesses have built in recent years. Not with the pictures of objects, but with the pictures of people. Because no one has ever explicitly given permission for their photo to end up in a database for training facial recognition systems.

ImageNet, the world’s best-known dataset of images for training AI systems, has recently taken measures to comply with privacy regulations. ImageNet is primarily intended as a repository of images of objects, but as bycatch it also contains images of people. Their faces have now been blurred to make them unrecognizable.

In a recent publication, the ImageNet team reports that blurred faces cause only a “slight loss in accuracy” across the board. This concerns, for example, recognizing a chair in a room where a person is also present. In specific cases (a mask, a harmonica) where the object is usually close to a face, the problems may be somewhat greater.

Non-existent person

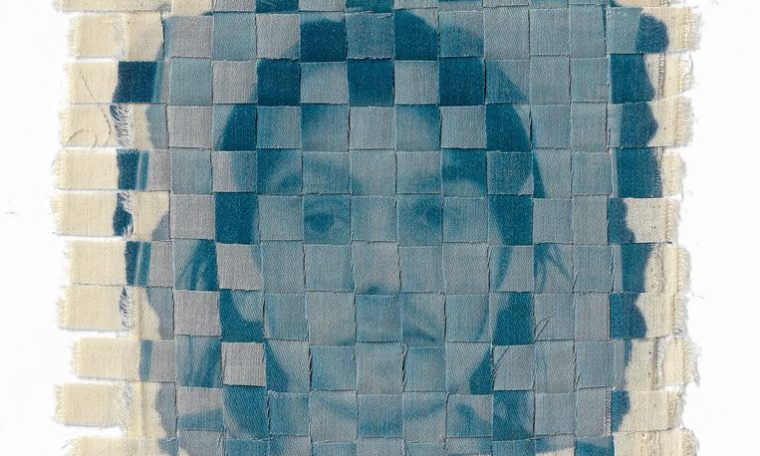

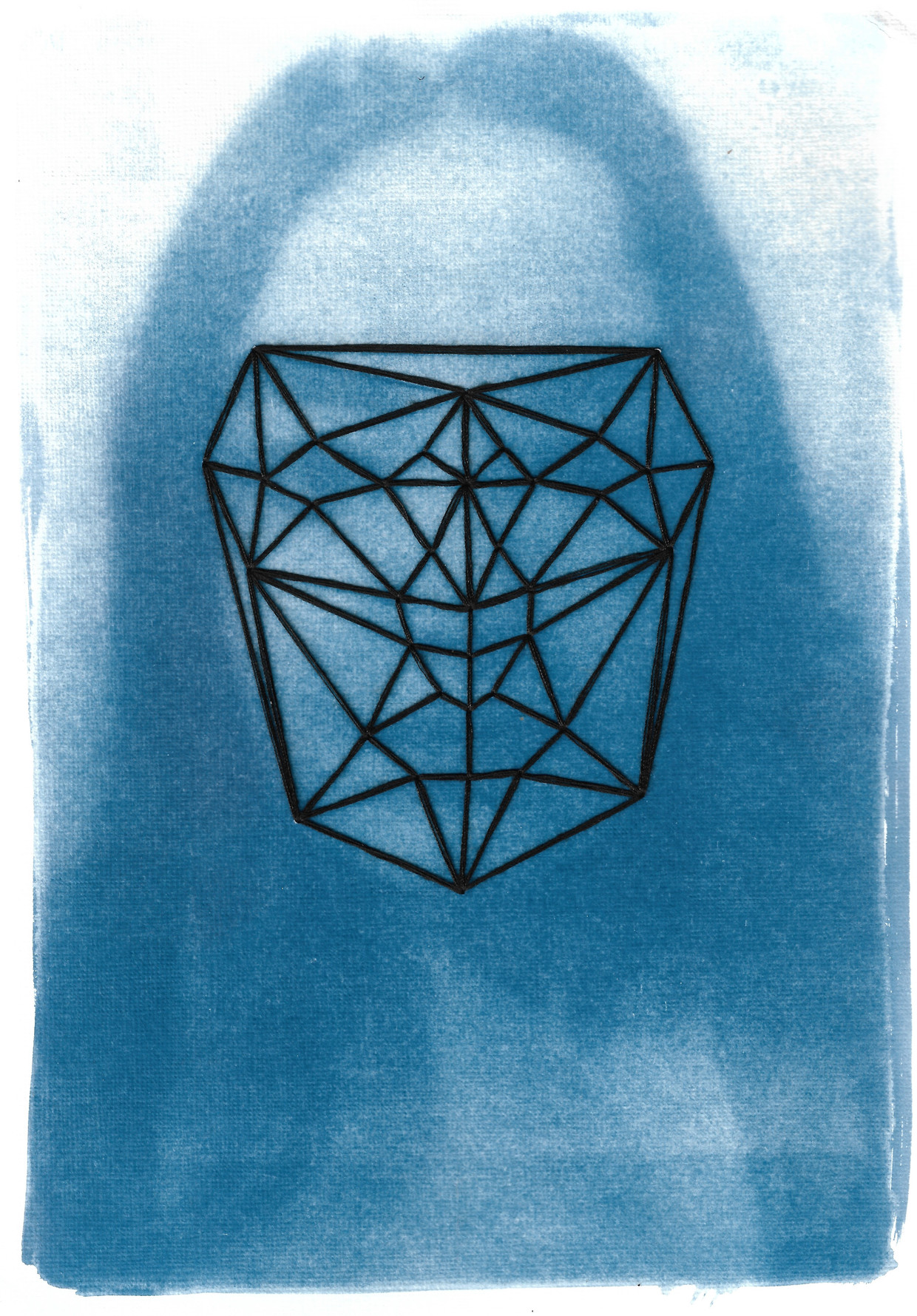

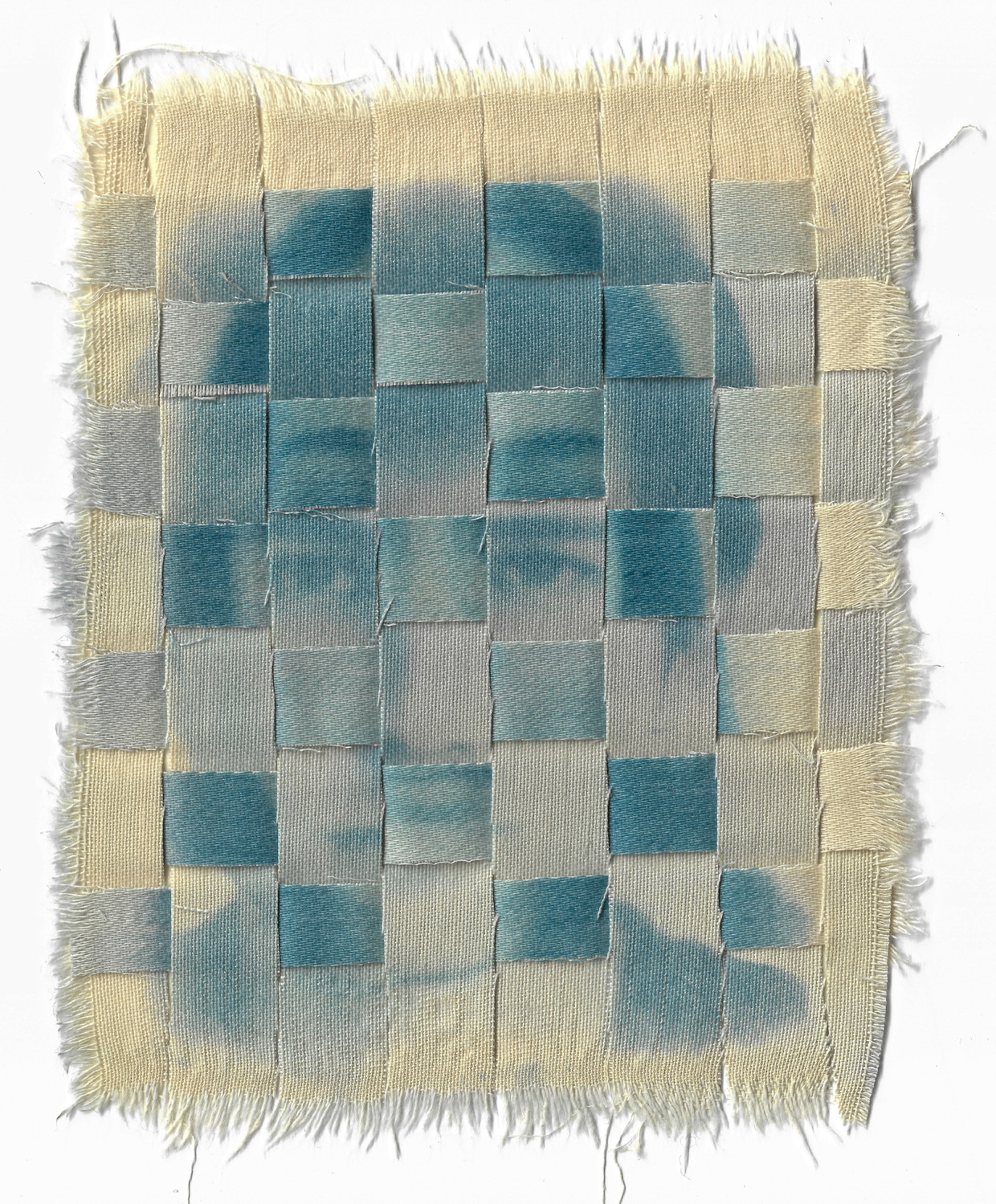

There are more ways to meet modern privacy concerns. Putting a block over a face, for example, or pixelating it. The most interesting method is also the most radical: not making existing pictures unrecognizable, but generating lifelike images of non-existent people. With so-called GANs (generative adversarial networks), that is a piece of cake. A publicly accessible site such as ThisPersonDoesNotExist, which produces a non-stop stream of photos of non-existent people (so-called deepfakes), uses such networks.
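The two simplest options mentioned here, a solid block and pixelation, can be sketched in a few lines of Python with NumPy. This is only an illustration, not the method used in any of the studies discussed: the function names `block_out` and `pixelate` are hypothetical, and the face box is assumed to come from a face detector that is not shown.

```python
import numpy as np


def block_out(image, box, value=0):
    """Cover the face box (top, left, bottom, right) with a solid block."""
    top, left, bottom, right = box
    out = image.copy()
    out[top:bottom, left:right] = value
    return out


def pixelate(image, box, cell=8):
    """Replace every cell-by-cell tile inside the box with its mean colour."""
    top, left, bottom, right = box
    out = image.copy()
    for y in range(top, bottom, cell):
        for x in range(left, right, cell):
            y2, x2 = min(y + cell, bottom), min(x + cell, right)
            tile = image[y:y2, x:x2]
            # Average over the tile's rows and columns, keeping the channels.
            out[y:y2, x:x2] = tile.mean(axis=(0, 1)).astype(image.dtype)
    return out


# Stand-in for a photo: random pixels (assumption, purely for demonstration).
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)

# Hypothetical output of a face detector: (top, left, bottom, right).
face_box = (16, 16, 48, 48)

blocked = block_out(image, face_box)
pixelated = pixelate(image, face_box, cell=8)
```

Both functions leave everything outside the box untouched, which is why an object detector can still find the chair or the bottle. What is lost is the face itself, and that is exactly the information a GAN-generated replacement tries to preserve in artificial form.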

Together with colleagues from the University of Amsterdam, Eindhoven University of Technology and the Eindhoven company ViNotion (specialized in smart surveillance), Snoek investigated how effective all these methods are. The conclusion: facial recognition systems perform better with artificial GAN faces than with blurred, blocked-out, or pixelated faces. Snoek is pleased with the (empirical) results: “You can replace faces in training data with artificial faces without significantly affecting the quality of detection.”

He calls this “good news” for companies that work on face detection and have to comply with European privacy law: “Deepfake technology allows you to store image data without harming people’s privacy.”

According to Snoek, the ImageNet solution (blurring) works fine in applications where a system has to pick out a certain object: where is the hammer, where is the bottle? But in situations where the face actually matters, it is less suitable, he fears. Snoek cites the self-driving car as an example, which has to judge when to brake for pedestrians. “In that case, the faces are relevant. People with blurred faces do not really exist.” In other words: the closer your dataset is to reality, the better the result. Deepfakes may be artificial, but they are the spitting image of a real face.

Privacy rules

And this is only a first step: “If you optimize a detector for the fact that it is working with deepfakes, it can perform even better.” Snoek sees a number of applications where this can come in handy: from self-driving cars and video surveillance to healthcare, where automated systems can monitor whether someone has fallen and therefore needs help.

The adjustments are necessary now because of European privacy regulations, but Snoek sees further benefits in using deepfakes. One of the major problems encountered in recent years is the imbalance in training data, in which white people are overrepresented. Snoek: “This effect is carried over by algorithms, which take it a step further.” For example, a system may favor white men in job applications, or fail to recognize that a black man is standing in front of an entrance.

By using deepfakes, the quality of the dataset can also be improved, Snoek hopes. Poor quality, in this case the presence of sexist and racist labels on photos, had already led to Tiny Images, the database of 80 million images from the well-known research institute MIT, being taken offline entirely.

Deepfakes have often been in the news in a negative light recently because of disinformation, but for Snoek it is clear that the benefits can be great as well. It depends on how they are used.